Knowing your way around the right research tools is essential when building an effective online presence. These tools show you which pages of your website search engines have indexed and why others were left out, so you can fix the gaps, improve your site’s visibility, and attract more visitors.

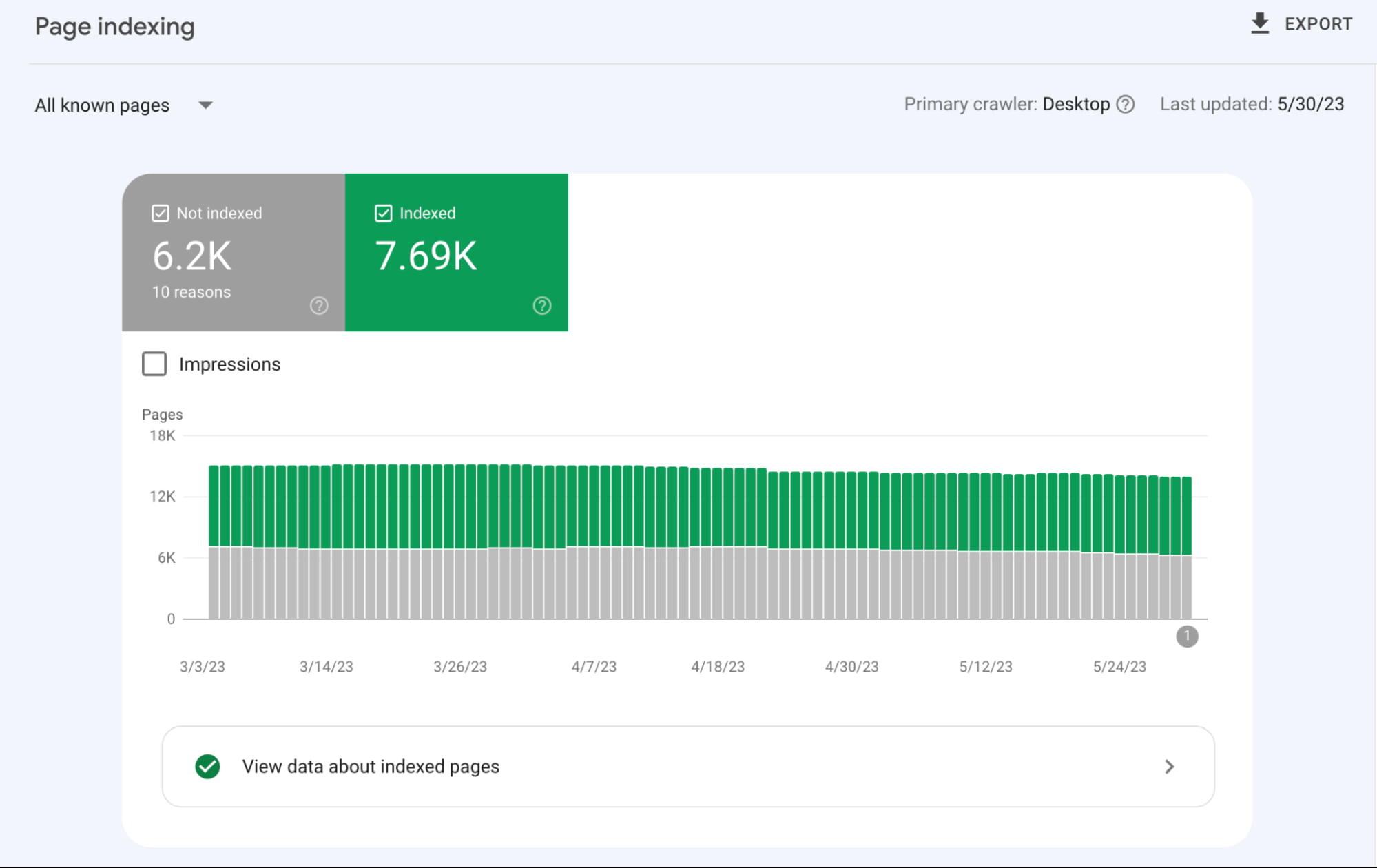

In this article, we demystify the Page Indexing Report in Google Search Console so you can check the indexing status of your pages and improve your website’s visibility. From understanding indexed pages to resolving issues such as blocked URLs and duplicate content, we walk through each part of the report to help you overcome any obstacles.

How to access the Page Indexing Report

To access the Page Indexing Report:

- Log in to Google Search Console.

- Choose a property.

- Click Pages under Indexing in the left navigation.

The report defines four status categories:

- Valid: pages that have been indexed.

- Valid with warnings: pages that have been indexed but contain some issues you may want to look at.

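- Excluded: pages that weren’t indexed, usually intentionally, for example because of a ‘noindex’ directive, a canonical tag pointing to another page, or a redirect. Some pages also end up here simply because Google hasn’t indexed them yet.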

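- Error: pages that couldn’t be indexed because of a problem, such as a server error, a redirect error, or a submitted URL that is blocked or returns an error code.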

Each status consists of one or more types. Let’s go through each of them.

Valid URLs

As mentioned above, “valid URLs” are pages that have been indexed. The “Valid” status contains the following two types:

- Submitted and indexed: These URLs were submitted through an XML sitemap and subsequently indexed.

- Indexed, not submitted in sitemap: These URLs were not submitted through an XML sitemap, but Google found and indexed them anyway. Action required: verify whether these URLs should be indexed. If they should, add them to your XML sitemap. If not, add a ‘noindex’ robots directive (a robots meta tag or an X-Robots-Tag HTTP header). Only block them in robots.txt, for example to save crawl budget, after they have dropped out of the index: Google can’t see a ‘noindex’ directive on a page it isn’t allowed to crawl.
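If you decide that some “Indexed, not submitted in sitemap” URLs belong in your sitemap, each one needs its own `<url>` entry. As a rough illustration, here is a minimal Python sketch using only the standard library. The function name `build_sitemap` is hypothetical, and the sketch writes only the required `<loc>` element of the sitemaps.org protocol, leaving out optional fields such as `<lastmod>`.

```python
from xml.etree import ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Return a minimal XML sitemap (as a string) listing each URL once, in order.

    Hypothetical helper for illustration: it emits only <loc>, the one
    element the sitemap protocol requires inside each <url>.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    seen = set()
    for url in urls:
        if url in seen:
            continue  # skip duplicates so each <loc> appears once
        seen.add(url)
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = url  # ElementTree escapes & as &amp;
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + ET.tostring(urlset, encoding="unicode")


print(build_sitemap(["https://example.com/", "https://example.com/guide?page=2&sort=new"]))
```

In practice, you would write the returned string to a file (for example, sitemap.xml) and submit it in the Sitemaps report in Search Console.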

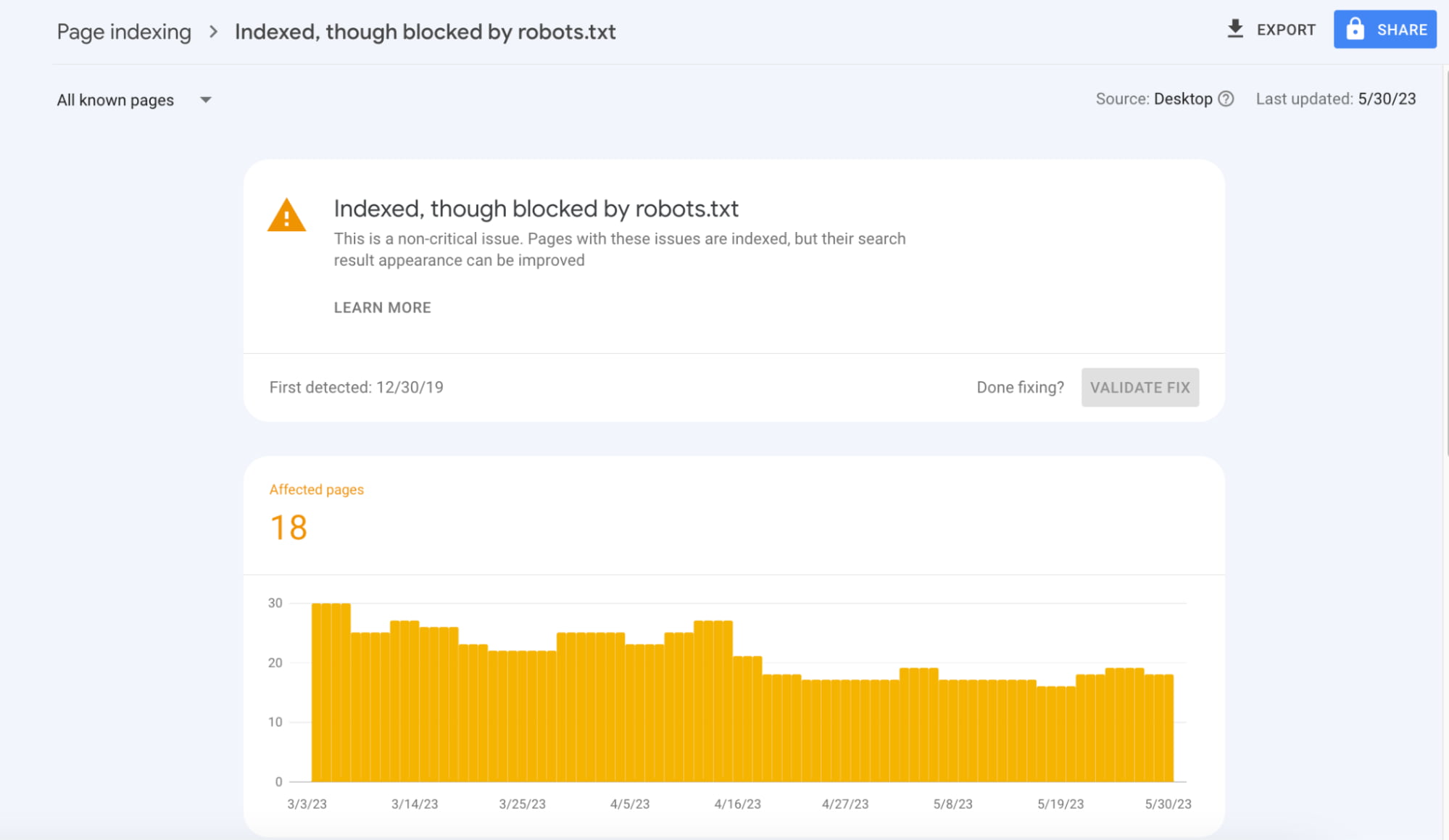

Valid URLs with warnings

The “Valid with warnings” status only contains two types:

- Indexed, though blocked by robots.txt: This warning indicates that search engines have indexed the URL even though the website’s robots.txt file blocks it from being crawled. This usually happens when other pages link to the URL, because robots.txt prevents crawling, not indexing. The URL may still appear in search results, but search engines cannot access its content.

- Indexed, though marked ‘noindex’: This warning indicates that the URL is in Google’s index even though it carries a ‘noindex’ directive. This usually means Google hasn’t recrawled the page since the directive was added; once it does and reads the ‘noindex’, it should remove the URL from its index and from search results.
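Both warnings come down to how robots.txt and ‘noindex’ interact: a crawler that is not allowed to fetch a page never sees the directives on it. The minimal Python sketch below checks a robots.txt body with the standard library’s `urllib.robotparser` and flags the case where a ‘noindex’ would stay hidden. The function names and result labels are hypothetical, and `robotparser` applies simple first-match prefix rules without Google’s `*` and `$` wildcard extensions, so treat its answers as an approximation of how Googlebot reads your file.

```python
from urllib import robotparser


def crawl_allowed(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt content lets user_agent fetch url."""
    parser = robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse text we already have; no network access
    return parser.can_fetch(user_agent, url)


def index_signal(robots_txt: str, url: str, has_noindex: bool) -> str:
    """Classify how robots.txt and a page-level noindex interact (hypothetical labels)."""
    allowed = crawl_allowed(robots_txt, url)
    if not allowed and has_noindex:
        # The crawler can't fetch the page, so it never reads the noindex.
        return "noindex-hidden-by-robots"
    if not allowed:
        # Content can't be crawled, but the URL may still be indexed via links.
        return "blocked-may-still-be-indexed"
    if has_noindex:
        # Crawlable page with noindex: it should drop out after it is recrawled.
        return "noindex-will-apply"
    return "indexable"


robots = "User-agent: *\nDisallow: /private/\n"
print(index_signal(robots, "https://example.com/private/offer", has_noindex=True))
# prints: noindex-hidden-by-robots
```

The robots.txt text is passed in directly so the check runs offline; `RobotFileParser` can also load a live file with `set_url()` and `read()`.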

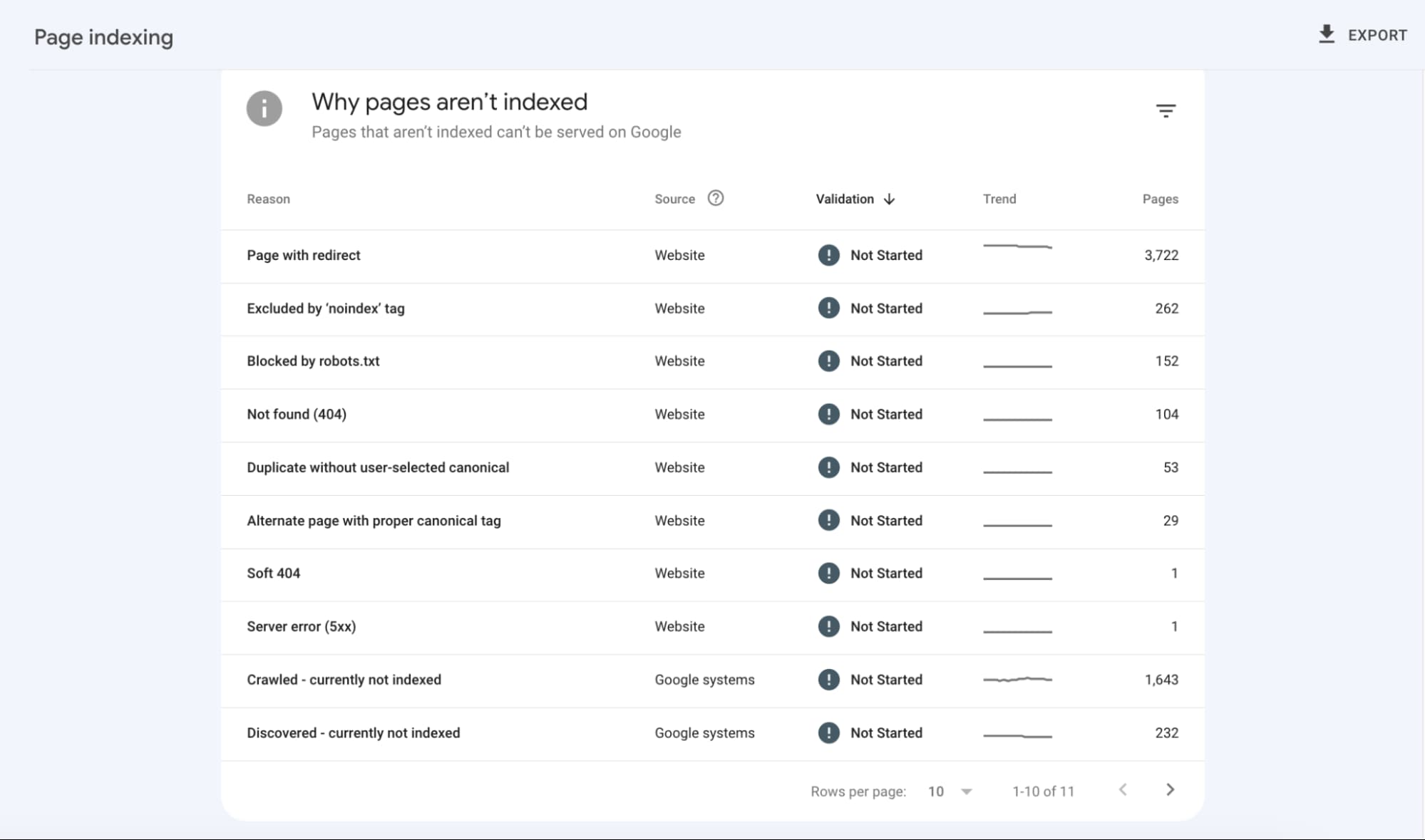

Excluded URLs

The “Excluded” status contains the following types:

- Alternate page with proper canonical tag: This URL is an alternate version of another page and correctly points to it with a canonical tag, telling search engines to treat that page as the primary version. No action is needed.

- Blocked by page removal tool: This URL is currently hidden from Google’s search results by a request made with the Removals tool in Search Console, typically by the website owner or administrator. Such removals are temporary; to keep a page out of search results permanently, remove the page or add a ‘noindex’ directive.

- Blocked by robots.txt: Instructions in the website’s robots.txt file block search engine crawlers from crawling this URL. The robots.txt file controls which parts of a website crawlers can access; note that it prevents crawling, not indexing, so a blocked URL can still be indexed if other pages link to it.

- Blocked due to access forbidden (403): This URL is blocked from being accessed by search engine crawlers because it returns a 403 Forbidden status code. This usually indicates that the server denies access to the page for some reason, such as permission restrictions.

- Blocked due to other 4xx issue: The server returned a 4xx status code that isn’t covered by the more specific types in this report (401, 403, and 404), for example 400 Bad Request for a malformed request. The report doesn’t name the specific code, so inspect the URL or check your server logs to find it.

- Blocked due to unauthorized request (401): This URL returns a 401 Unauthorized status code, which means the request lacks valid authentication credentials, for example because the page sits behind a login. Search engine crawlers don’t log in, so they can’t access or index the page.

- Crawl anomaly: Google ran into an unspecified problem while fetching this URL, such as an unexpected 4xx or 5xx response. The report doesn’t say what went wrong, so fetch the page with the URL Inspection tool to learn more.

- Crawled – currently not indexed: Google has crawled this URL but chose not to index it for now. The report doesn’t give a reason; the page may or may not be indexed in the future, and there is no need to resubmit it for crawling.

- Discovered – currently not indexed: Google has found this URL, for example through a link or a sitemap, but hasn’t crawled it yet. Typically, Google postponed the crawl because crawling the site at that moment was expected to overload the server.

- Duplicate without user-selected canonical: Google considers this URL a duplicate of another page, and the page doesn’t specify a preferred version with a canonical tag. Google has chosen the other page as the canonical, so this URL won’t be shown in search results. To control which version is indexed, add a canonical tag pointing to your preferred page.

- Duplicate, Google chose a different canonical than the user: This URL is a duplicate of another page, and although the user has specified a canonical tag, Google has chosen a different canonical version of the content. This means that Google has determined a different page as the preferred version to be indexed.

- Duplicate, submitted URL not selected as canonical: This URL is one of a set of duplicate pages with no explicitly marked canonical. You asked for this URL to be indexed, for example by submitting it in a sitemap, but Google considers another URL a better canonical candidate and indexed that one instead.

- Excluded by ‘noindex’ tag: This URL has been excluded from indexing by search engines because it contains a ‘noindex’ tag. The ‘noindex’ tag is used to instruct search engine bots not to include the page in their index.

- Not found (404): This URL returns a 404 Not Found status code, indicating that the page could not be found on the server. As a result, it is not indexed by search engines.

- Page removed because of legal complaint: This URL has been removed from Google’s search results in response to a legal complaint, such as a copyright claim about the page’s content.

- Page with redirect: This URL serves as a redirect to another page. When search engine bots encounter this URL, they are redirected to a different URL specified in the redirect. As a result, the content of this URL may not be indexed separately, and search engine users will be directed to the destination page instead.

- Soft 404: These URLs don’t return an HTTP 404 status code, but their content looks like an error page, for instance, by showing a “Page can’t be found” message. Soft 404s can also result from redirects to pages Google considers irrelevant to the original URL, such as redirecting many removed pages to the homepage.
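Many of the types above follow from what a crawler gets back when it fetches a URL: the status code, an X-Robots-Tag header, a robots meta tag, or a canonical link. As a rough illustration, the Python sketch below maps a single fetch result to the type it most resembles. It is a hypothetical helper, not how Google classifies pages: it ignores robots.txt, crawl scheduling, and Google’s own canonical selection, and its soft 404 check is a crude phrase match assumed for this example.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical phrases for a crude soft 404 check; Google's real detection is not public.
SOFT_404_PHRASES = ("page not found", "page can't be found", "page cannot be found")
REDIRECT_CODES = (301, 302, 303, 307, 308)


class HeadSignals(HTMLParser):
    """Collect robots meta directives and the first canonical link."""

    def __init__(self):
        super().__init__()
        self.robots = []       # content values of robots/googlebot meta tags
        self.canonical = None  # href of the first <link rel="canonical">

    def handle_starttag(self, tag, attrs):
        attrs = {name: (value or "") for name, value in attrs}  # HTMLParser lowercases names
        if tag == "meta" and attrs.get("name", "").lower() in ("robots", "googlebot"):
            self.robots.append(attrs.get("content", ""))
        elif tag == "link" and self.canonical is None:
            if "canonical" in attrs.get("rel", "").lower().split():
                self.canonical = attrs.get("href") or None


def directive_tokens(values):
    """Split values like 'noindex, follow' or 'googlebot: noindex' into lowercase tokens."""
    tokens = set()
    for value in values:
        for part in value.lower().split(","):
            tokens.add(part.split(":")[-1].strip())
    return tokens


def likely_type(url, status, headers, html):
    """Return the report type one fetch result most resembles (heuristic only)."""
    if status in REDIRECT_CODES:
        return "Page with redirect"
    if status == 401:
        return "Blocked due to unauthorized request (401)"
    if status == 403:
        return "Blocked due to access forbidden (403)"
    if status == 404:
        return "Not found (404)"
    if 400 <= status < 500:
        return "Blocked due to other 4xx issue"
    if status >= 500:
        return "Server error (5xx)"

    signals = HeadSignals()
    signals.feed(html)
    signals.close()
    header_values = [v for k, v in headers.items() if k.lower() == "x-robots-tag"]
    if directive_tokens(signals.robots + header_values) & {"noindex", "none"}:
        return "Excluded by 'noindex' tag"
    if signals.canonical and urljoin(url, signals.canonical) != url:
        return "Alternate page with proper canonical tag"
    if any(phrase in html.lower() for phrase in SOFT_404_PHRASES):
        return "Soft 404"
    return "No exclusion signal found"


print(likely_type("https://example.com/a", 200, {}, '<link rel="canonical" href="/b">'))
# prints: Alternate page with proper canonical tag
```

The status code is checked first because, for error and redirect responses, the page body is not what gets indexed.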

Error URLs

The “Error” status contains the following types:

- Redirect error: This error occurs when Google runs into a problem while following a redirect from the submitted URL, for example a redirect loop, a redirect chain that is too long, or an empty or invalid URL in the chain.

- Server error (5xx): This error indicates that the server encountered an internal error while processing the submitted URL. Server errors in the 5xx range typically suggest temporary issues or problems with the server configuration.

- Submitted URL blocked by robots.txt: This error occurs when the submitted URL is blocked from crawling by search engine bots due to instructions specified in the website’s robots.txt file.

- Submitted URL blocked due to other 4xx issue: This error indicates that the submitted URL is blocked from being accessed or crawled due to another issue related to a 4xx status code. The specific reason for the block is not provided in the error description.

- Submitted URL has a crawl issue: This error suggests that the submitted URL encountered a problem during the crawling process. It could be due to various issues such as timeouts, connectivity problems, or other technical difficulties preventing the successful crawling of the URL.

- Submitted URL marked ‘noindex’: This error indicates that the submitted URL has been marked with a ‘noindex’ tag, instructing search engines not to include the page in their index. As a result, the URL will not appear in search engine results.

- Submitted URL not found (404): This error occurs when the submitted URL returns a 404 Not Found status code. It indicates that the page could not be found on the server and is not accessible.

- Submitted URL seems to be a Soft 404: This error suggests that the submitted URL appears to be a soft 404. Soft 404 errors occur when a page returns a 200 OK status code (indicating that the page exists) but displays content that resembles a typical 404 error page.

- Submitted URL returned 403: This error indicates that the submitted URL returned a 403 Forbidden status code. It means the server denied access to the page, usually due to permission restrictions or authentication requirements.

- Submitted URL returns unauthorized request (401): This error occurs when the submitted URL returns a 401 Unauthorized status code. It indicates that the user making the request is not authenticated or lacks the necessary credentials to access the page.
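A redirect error often comes from a redirect loop or a chain that is too long. If you have collected your site’s redirects (for example, from a crawl) into a mapping of source URL to target URL, a minimal Python sketch like the one below can walk each chain and flag those problems. The function name and the default limit of 10 hops are assumptions for illustration; adjust the limit to whatever threshold you want to enforce.

```python
from typing import Dict, Optional, Tuple


def follow_redirects(start: str, redirects: Dict[str, str], max_hops: int = 10) -> Tuple[str, Optional[str]]:
    """Walk a redirect map from start; return (last URL reached, problem or None).

    max_hops=10 is an assumed threshold for this sketch, not an official limit.
    """
    seen = {start}
    current = start
    for _ in range(max_hops):
        target = redirects.get(current)
        if target is None:
            return current, None  # current doesn't redirect: the chain ends cleanly
        if not target:
            return current, "empty redirect target"
        if target in seen:
            return target, "redirect loop"
        seen.add(target)
        current = target
    # max_hops redirects followed; still redirecting means the chain is too long
    if current in redirects:
        return current, "redirect chain too long"
    return current, None


site_redirects = {"/old": "/older", "/older": "/old", "/promo": "/sale"}
for source in ("/old", "/promo"):
    print(source, follow_redirects(source, site_redirects))
```

Chains that end cleanly return `None` as the problem, so you can filter the results for the URLs that need fixing.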

Conclusion

Understanding and using the Page Indexing Report in Google Search Console helps ensure your website’s pages are correctly indexed and visible in search results. By staying on top of indexing issues and taking appropriate action, you can maximize the visibility and reach of your website, drive more organic traffic, and achieve your online goals.